Backfilling a Real-Time Analytics Data Pipeline to Ensure Consistency and Correctness

Real-time analytics has become essential for many businesses. By processing data as it arrives, companies can act on insights while events are still unfolding, which keeps decision-making both fast and well informed. This capability matters across a wide range of use cases, from identifying fraudulent activity early to refining digital marketing campaigns in real time.

This shift toward instant data processing is enabled by a set of maturing streaming services, led by Confluent (Apache Kafka) and including AWS Kinesis, AWS MSK, Redpanda, and Apache Pulsar. While much is new in modern data pipelines built around streaming architectures, some key components of batch architectures are still very relevant today. One of those components is backfilling.

In this blog, we will discuss:

- What is backfilling?

- The role of backfilling in a real-time analytics data pipeline

- Use cases for backfilling

- Backfilling support in StarTree

What is backfilling?

Backfilling is a term used to describe the process of retroactively introducing historical data in a data pipeline, often undertaken to ensure consistency and correctness. The reasons why we backfill data are myriad: data quality incidents, anomalies in production systems, schema migrations, and so on.

Real-time analytical systems are also backfilled when we want to combine historical data with real-time data feeds. For example, a Kafka pipeline may only keep the most recent week or month’s worth of data. If you wanted to query data from an earlier time window, you would have to backfill that older data. In Apache Pinot, this is easily accomplished because it supports hybrid tables: combinations of real-time data and historical batch data. Users can set time boundaries for when data is split between its real-time component and its batch (or “offline”) component.
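As a rough illustration of the time-boundary idea, the Python sketch below sums a metric across a batch component and a real-time component without counting the overlapping period twice. The `Row` type, the `query_hybrid` function, and the day-number timestamps are hypothetical names invented for this example; it is a simplified model of the concept, not Pinot's query engine or API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Row:
    ts: int     # event time as a day number (hypothetical unit for this sketch)
    value: int  # metric being aggregated


def query_hybrid(offline_rows, realtime_rows, boundary):
    """Sum `value` across both components, splitting them at `boundary`.

    Rows at or before the boundary are read from the batch ("offline") side;
    rows after it are read from the real-time side. Any overlap between the
    two components is therefore counted only once.
    """
    offline_part = sum(r.value for r in offline_rows if r.ts <= boundary)
    realtime_part = sum(r.value for r in realtime_rows if r.ts > boundary)
    return offline_part + realtime_part


# Backfilled history covers days 1-3; the stream covers days 3-5.
# Day 3 exists on both sides, but only the offline copy is used.
offline = [Row(1, 10), Row(2, 20), Row(3, 30)]
realtime = [Row(3, 29), Row(4, 40), Row(5, 50)]
total = query_hybrid(offline, realtime, boundary=3)
print(total)  # 150
```

In this model, backfilling more history only means adding older rows to the offline side; the real-time side and the boundary logic stay unchanged.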

Backfilling is sometimes seen as a consequence of poor planning, or as a cleanup step required after some type of system failure. However, in the agile world of application development, backfilling is also what allows developers to iterate faster. Knowing they can change the data structure later based on user feedback is liberating and allows organizations to adapt to customers’ needs promptly.

In cases where the chosen data platform lacks efficient backfilling support, the only recourse is to re-ingest the entire dataset, a costly and time-intensive process that often hinders experimentation. Fortunately, StarTree Cloud, powered by Apache Pinot, has robust support for backfilling.

Do we need backfilling in the streaming data pipeline?

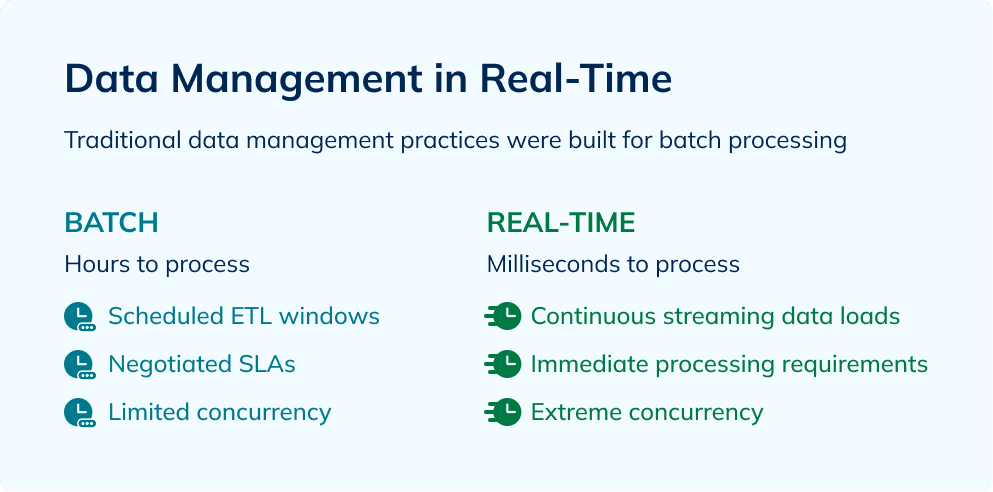

In the early stages of adopting the streaming data pipeline, data engineers often utilized the Lambda architecture. This involved using a streaming pipeline for real-time event responses, while a batch pipeline handled operational and ad-hoc analytics.

In this architecture, the real-time layer prioritizes latency of response, but it doesn’t guarantee correctness or completeness. Before the broad adoption of stream processing, there was minimal complex transformation before data reached the real-time layer, limiting its applicability to rule-based tasks like fraud detection.

With the advancement of streaming data pipeline technology, data engineers now have the capability to employ sophisticated data modeling and create optimal data structures for large-scale user-facing analytics applications. While smaller datasets may not necessitate a backfilling strategy, it becomes absolutely critical for applications dealing with billions to trillions of records.

As streaming data pipelines are deployed to serve revenue-impacting applications, it’s imperative that we implement an efficient backfilling strategy, especially for applications handling extensive datasets. This helps ensure accuracy, completeness, and good performance for our user-facing analytics.

When do we need backfilling?

There are several scenarios where a data engineer would need to backfill data in a streaming data pipeline.

1. Handling system failures and downtime: Real-time OLAP systems, while highly efficient, are also prone to occasional disruptions due to failures or maintenance activities. These events may lead to temporary interruptions in data processing or real-time ingestion. Fortunately, in many cases, these hiccups are swiftly identified and resolved, allowing the data pipeline to seamlessly regain its flow without requiring external intervention.

However, when immediate recovery is not possible, the recommended approach is backfilling, provided your real-time OLAP system supports it. Backfilling ensures that no vital information is lost during these downtime periods. It acts as a safety net, enabling the system to recover any missed data once it is back online, thereby safeguarding the integrity of critical information. This practice plays a pivotal role in maintaining data consistency and reliability in real-time OLAP systems.

2. Bug in an ETL pipeline: ETL workflows typically involve complex transformations and huge volumes of data. The complexity of an ETL pipeline grows with the number of data transformations and business rules integrated into the process. These rules are important to ensure that applications leveraging the data for analytics are working with accurate, comprehensive, and relevant data.

Any identified bug in the pipeline will require reprocessing the data affected by the bug once it has been addressed. Backfilling serves as a crucial step to ensure that all data inconsistencies or gaps created by the bug are rectified.

3. Change in business logic or metric: This is one of the most common and important reasons for backfilling in data management. Changes in business logic or metrics are often a result of evolving business strategies, regulatory requirements, or insights gained from data analytics. When such changes occur, it is essential to apply the new logic or metrics retroactively to historical data to maintain consistency across reports and analytics.

Backfilling enables organizations to reprocess historical data through updated business rules or metrics, ensuring that all data reflects the most current understanding of the business. This is crucial for accurate decision-making and maintaining trust in data reports among stakeholders.

4. Late arriving data: Late arriving data refers to data that does not arrive in time to be processed in its expected order within the data pipeline. This can happen for various reasons, such as delays in data extraction from source systems, network issues, or batch processing of data at the source. In real-time OLAP systems, late arriving data can disrupt the chronological order of data processing, leading to inaccuracies in analytics and reporting.

Backfilling is essential in handling late arriving data. It allows data engineers to insert or update records in their proper place within the dataset, ensuring that the data maintains its chronological integrity. This process is critical for time-sensitive analytics and reporting, where the accuracy of data sequencing directly impacts the insights derived from the data.

5. Schema changes: Another critical use case for backfilling is migrating to a new data platform or changing a schema. Such migrations or changes often require a comprehensive review and transformation of existing data to fit the new environment or schema requirements. Backfilling ensures that historical data is compatible with the new system’s requirements, allowing for seamless integration and continued access to historical insights without data loss or inconsistency.
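Several of the scenarios above, notably downtime and late arriving data, come down to the same planning question: which time windows need to be rebuilt? The sketch below works that out for daily windows. The function names `day_bucket` and `buckets_to_backfill`, and the assumption that data is partitioned by UTC day, are illustrative choices for this example rather than anything prescribed by a particular platform.

```python
from datetime import datetime, timedelta, timezone


def day_bucket(ts: datetime) -> datetime:
    """Truncate a timezone-aware timestamp to the start of its UTC day."""
    ts = ts.astimezone(timezone.utc)
    return ts.replace(hour=0, minute=0, second=0, microsecond=0)


def buckets_to_backfill(late_event_times, outage_start, outage_end):
    """Return the sorted daily buckets that should be rebuilt.

    A bucket qualifies if a late event belongs to it, or if it overlaps
    the outage window [outage_start, outage_end). All timestamps are
    assumed to be timezone-aware.
    """
    buckets = {day_bucket(t) for t in late_event_times}

    # Walk every UTC day touched by the outage window.
    day = day_bucket(outage_start)
    while day < outage_end:
        buckets.add(day)
        day += timedelta(days=1)

    return sorted(buckets)


utc = timezone.utc
late_events = [
    datetime(2024, 3, 1, 23, 50, tzinfo=utc),
    datetime(2024, 3, 1, 10, 0, tzinfo=utc),
]
plan = buckets_to_backfill(
    late_events,
    outage_start=datetime(2024, 3, 3, 22, 0, tzinfo=utc),
    outage_end=datetime(2024, 3, 4, 2, 0, tzinfo=utc),
)
for bucket in plan:
    print(bucket.date())
```

Both late events fall on the same day, so they produce a single bucket, while the four-hour outage crosses midnight and therefore marks two days for rebuilding.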

StarTree supports backfilling natively

Due to its inherently flexible architecture, StarTree supports backfilling even for a table created from a real-time streaming data source. Backfilling in StarTree allows users to update historical data, which is crucial for correcting inaccuracies, adding new information, or reflecting changes in data classification standards.

Unlike traditional databases, where backfilling can be a resource-intensive and time-consuming process, StarTree leverages Minions, which allow it to backfill data without any impact on applications running on the StarTree cluster. Minions are built to leverage cloud elasticity, making them efficient and cost-effective not only for backfilling but also for ingestion in general. To learn more about minions and batch ingestion, read this blog.

Key features

StarTree customers have been able to simplify their data pipelines by leveraging the following features, which are the building blocks of StarTree's backfilling capabilities:

- Segment Replacement: Apache Pinot manages data in segments, which are individual units of data storage. By dividing data into manageable segments, Pinot allows for efficient updates or corrections by replacing entire segments of data rather than individual records. This innovative approach minimizes the performance impact typically associated with data modifications, ensuring continuous, uninterrupted access to analytics. For backfilling, Pinot allows the replacement of existing segments with new ones that contain the updated data.

- Atomic Updates: Atomic updates in Apache Pinot represent a critical mechanism that guarantees seamless and instantaneous updates or replacements of data without compromising the system’s availability or query accuracy. This feature ensures that the transition between old and updated data is handled with precision, allowing no room for error or data inconsistency. Atomic updates are pivotal for maintaining the integrity and continuity of real-time analytics, offering businesses a reliable way to keep their data landscapes both current and accurate.

- Support for Batch and Stream Data: Pinot supports backfilling for both batch data sources and real-time streams. Users can backfill large volumes of historical data efficiently, which is crucial for integrating legacy data or conducting comprehensive analysis over long time periods. The platform’s design facilitates rapid ingestion of batch data, allowing businesses to backfill historical data stored in batch files and combine it with data ingested in real time from streaming sources.

- Retention Policies: Pinot’s backfilling capabilities are complemented by its data retention policies, which allow for the automatic expiration and removal of outdated data segments. This feature ensures that only the most relevant and accurate data is retained for querying, optimizing storage usage and query performance.
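To make segment replacement and atomic updates more concrete, here is a minimal in-memory sketch of the pattern: readers take an immutable snapshot of the segment list, and a backfill swaps in all replacement segments for a time range in a single step. The `Segment` and `SegmentRegistry` classes and their methods are hypothetical and written only for this example; they do not represent Pinot's or StarTree's actual implementation or API.

```python
import threading
from dataclasses import dataclass


@dataclass(frozen=True)
class Segment:
    name: str
    start_day: int
    end_day: int  # inclusive


class SegmentRegistry:
    """Toy registry: queries read a snapshot, backfills swap segments."""

    def __init__(self, segments):
        self._lock = threading.Lock()   # serializes writers only
        self._segments = tuple(segments)

    def snapshot(self):
        # The tuple is immutable, so a reader holding it never observes
        # a half-applied replacement.
        return self._segments

    def replace_range(self, start_day, end_day, new_segments):
        """Replace every segment inside [start_day, end_day] in one step."""
        new_segments = list(new_segments)
        for seg in new_segments:
            if seg.start_day < start_day or seg.end_day > end_day:
                raise ValueError(f"{seg.name} falls outside the replaced range")

        with self._lock:
            kept = []
            for seg in self._segments:
                outside = seg.end_day < start_day or seg.start_day > end_day
                inside = seg.start_day >= start_day and seg.end_day <= end_day
                if outside:
                    kept.append(seg)
                elif not inside:
                    # Refuse partial overlaps rather than guess how to split.
                    raise ValueError(f"{seg.name} straddles the replaced range")
            # One reference assignment publishes the complete new list.
            self._segments = tuple(
                sorted(kept + new_segments, key=lambda s: s.start_day)
            )


registry = SegmentRegistry(
    [Segment("day1", 1, 1), Segment("day2", 2, 2), Segment("day3", 3, 3)]
)
before = registry.snapshot()
registry.replace_range(2, 2, [Segment("day2_v2", 2, 2)])
after = registry.snapshot()
print([s.name for s in before])  # ['day1', 'day2', 'day3']
print([s.name for s in after])   # ['day1', 'day2_v2', 'day3']
```

The design choice worth noting is that a failed validation raises before anything is published, so a query sees either the old segments or the complete new set, never a mixture.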

Conclusion

In conclusion, backfilling plays a pivotal role in maintaining the consistency, correctness, and adaptability of real-time analytics data pipelines. By addressing scenarios such as system failures, schema changes, or evolving business logic, backfilling ensures that historical data remains accurate and aligned with real-time insights. StarTree, with its robust features like segment replacement, atomic updates, and support for both batch and streaming data, provides a seamless and efficient approach to backfilling. This capability not only safeguards data quality but also empowers businesses to make reliable, timely decisions based on comprehensive data, reinforcing the strategic value of real-time analytics.

Ready to take StarTree Cloud for a spin? Get started immediately in your own fully-managed serverless environment with StarTree Cloud Free Tier. If you have specific needs or want to speak with a member of our team, you can book a demo.